Hi

I was wondering if there is a way to backup all the files?

Thank you so much!

You can manually download all template, layout, pages and asset files via FTP.

Database items, however, need to be exported via their modules.

Automating FTP connections would need to be done externally via your FTP client or other software.
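For anyone who wants to script this externally, here is a minimal sketch of a recursive FTP download using Python's standard `ftplib`. It is not a Treepl feature, just one way to automate the manual download described above. It assumes the FTP server supports the `MLSD` listing command; the function name `mirror` and the folder names are made up for illustration.

```python
from pathlib import Path


def mirror(ftp, remote_dir, local_dir):
    """Recursively download remote_dir into local_dir; return the files saved."""
    local_dir = Path(local_dir)
    local_dir.mkdir(parents=True, exist_ok=True)
    saved = []
    # MLSD yields (name, facts) pairs; facts["type"] is "dir", "file", etc.
    for name, facts in ftp.mlsd(remote_dir):
        if name in (".", ".."):
            continue
        remote_path = f"{remote_dir.rstrip('/')}/{name}"
        kind = facts.get("type")
        if kind == "dir":
            # Descend into sub-folders, mirroring the same layout locally.
            saved += mirror(ftp, remote_path, local_dir / name)
        elif kind == "file":
            target = local_dir / name
            with open(target, "wb") as fh:
                ftp.retrbinary(f"RETR {remote_path}", fh.write)
            saved.append(target)
    return saved
```

To use it, open a connection with `ftplib.FTP(host)` (or `FTP_TLS` if your server requires it), call `login(user, password)` with your own site's FTP details, and then call `mirror(ftp, "/", "site-backup")`. As noted above, this only captures files, not database items.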

Proper, built-in automated backup is planned for Treepl CMS, but not until version 7, so it is probably still a little way off.

Hi Adam,

In ‘Pages’ can we backup the ‘SEO’ tab components in any way at all?

i.e. the SEO tab has: Meta Title, Meta Desc, Head, Canonical, OG, Amp.

I can’t seem to do this by FTP, and there doesn’t seem to be any form of export.

Not via FTP no, as this is data stored in the CMS.

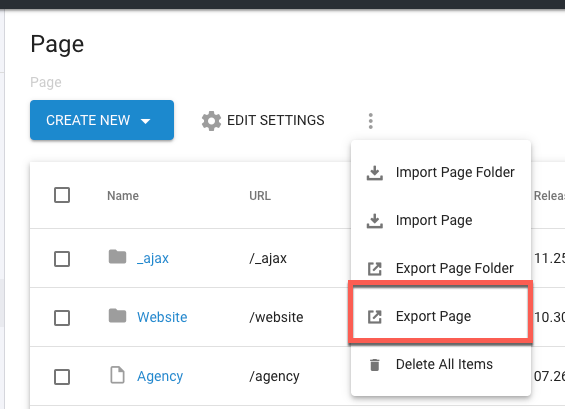

But there is an export feature for Pages which includes this data:

If anybody is looking for an automated FTP backup solution, I’ve been trying to get Microsoft Flow to back up directly to Dropbox, but I can’t crack it. I think it’s supposed to be able to.

This would give me versioning, but wouldn’t capture modularized data.
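As a rough alternative for the versioning part, a locally downloaded copy of the site files can be zipped into a timestamped archive on each run. This is only a sketch using Python's standard library, not the Microsoft Flow approach. The function name `snapshot` and the `site-` file prefix are made up, and it assumes the archive folder sits outside the folder being zipped (for example, inside a folder your Dropbox client already syncs).

```python
import shutil
from datetime import datetime
from pathlib import Path


def snapshot(source_dir, archive_dir, now=None):
    """Zip source_dir into archive_dir/site-YYYYMMDD-HHMMSS.zip; return its path."""
    now = now or datetime.now()
    archive_dir = Path(archive_dir)
    archive_dir.mkdir(parents=True, exist_ok=True)
    # make_archive appends the ".zip" extension to the base name itself.
    base = archive_dir / f"site-{now:%Y%m%d-%H%M%S}"
    return Path(shutil.make_archive(str(base), "zip", root_dir=source_dir))
```

Each run leaves a separate dated zip, so older copies are kept until you delete them. Like the FTP approach, it still would not capture modularized data.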

Thanks Adam,

If I put it in list view (instead of tree) I can Export all ‘pages’ with a single click, which is great.

Folders, however, seem to require that I visit every folder on the site and export that folder’s ‘contained folders’.

Is there a better way to do this?

Hi @Reagan_Vautier. It looks like you can only export a single view at a time and not the whole structure (with nested items, etc.).

I’ve found a way to export ALL page folders in one export (including subfolders), but again, it’s only as a flat view. There are no references to how they are structured in the directory overall (no parent IDs or even URLs).

Any update on backing up an entire site?

Hey @Jerry_Dever

@alex mentioned this as a specific example flow that they are considering. I’m not sure if that means it’s coming up soon, but it’s definitely on their mind. I’ll let the Treepl team comment as to where that is in their priority queue right now.

One thing to note is that this is in the public backlog but it has no votes from partners. I know it is an important feature, but I think people have other priorities for their few votes. Unfortunately, backups don’t win (most) clients; ecommerce/integration features do.

The good news is that there is a page roll back feature that is in the top voted items, which will address the “somebody messed it up” case. My understanding is that once the masterplan is complete, then Treepl will focus on items in the backlog based on votes, so hopefully it will be developed soon.

In the meantime I’ve kind of jerry-rigged a solution. It’s definitely not perfect, but it does capture most of the data I need to go back and restore for the last 30/180 days. I’ve documented it in this forum post. I would still love to see a backup option that directly deposits all website files and module/database data into a Dropbox (or similar) folder. It would give me good peace of mind.

Now that I’ve got a few sites on Treepl, I would really like to see this feature move up the list. I’m ready to vote for this feature, but it seems I can’t yet as it needs to be reviewed by Treepl?

I think that the datacentres are being backed up in an automated manner behind the scenes at least daily, and that we can use a support request to have our sites reverted to a backup point date/time. Can the Treepl team please confirm this is the case?

I’m currently using the Treepl Portal’s Duplicate Site feature as a backup mechanism. I duplicate the site to a different datacentre from the one where the live site lives. Note that there is a limited number of trial sites, and therefore of backup points that you can make this way. Note also that you’ll want to disable analytics tracking and make sure search engines can’t crawl the duplicate/backup sites. I find this method fast, easy and efficient.